This report examines how data analytics can be applied to a variety of datasets, with a focus on the practical use of programming languages, particularly Python. The project is centered on two datasets: a CSV file of financial metrics for General Motors covering the past fifty years and a JSON file of unstructured tweets. The purpose of the project is to carry out an in-depth analysis of patterns in bike data and traffic volume data. The study draws on a blend of data sources (Dublin Bikes usage data, cordon count statistics, traffic volume data and so forth) and applies statistical methods, machine learning techniques, and interactive visualizations to discover insights into cycling adoption, infrastructure usage, and cyclist behavior patterns. It combines several analytical approaches, from sentiment analysis of cycling-related discussions through time series forecasting of cycling trends to comparative statistical analysis of cycling data, in order to provide evidence-based recommendations for improving cycling infrastructure and policies. Through data preprocessing, exploratory data analysis, and the application of machine learning models, the project aims to identify the key factors that affect the adoption and usage patterns of cycling.

This data analytics project aims to analyze cycling and traffic patterns by integrating several data sources, including Dublin Bikes usage statistics and cordon count data. In terms of scope, it uses statistical methods, machine learning techniques and interactive visualizations to deliver meaningful insights into cycling adoption, infrastructure utilization and behavior patterns (Yu et al. 2021). The project employs various analytical approaches, including sentiment analysis of cycling-related discussions, time series forecasting, and comparative statistical analysis, to provide evidence-based recommendations on how cycling infrastructure and policies can be improved. The analysis focuses on data preprocessing, exploratory analysis and the development of machine learning models to predict the factors influencing cycling adoption and usage patterns (Nica, 2021). Findings will be presented through an interactive dashboard developed for stakeholders in Ireland's cycling sector to support data-driven decision making on sustainable urban mobility.

The programming tasks for this data analytics project were to handle, sort and merge well-defined datasets. The first dataset was a CSV file of financial metrics, which was preprocessed to fill missing data, normalize formats and cast columns to reduce memory usage. The Programming for Data Analytics component of the project comprises six tasks covering data handling and analysis. It begins with the requirement to set up Python tools and libraries in Jupyter Notebooks to perform the analysis, with an emphasis on code quality standards and thorough justification of all programming decisions. Because the work involves data from different sources, a critical evaluation is needed to choose which libraries and techniques best optimize data processing (Zhang et al. 2022). Data manipulation receives a great deal of emphasis: information held in multiple data structures is aggregated and processed, with the data stored in at least two different formats, such as CSV files and JSON data from web APIs. The project also places great emphasis on the testing strategy, that is, on how the code is checked to be working and how the techniques used during implementation are evaluated and documented (Ang et al. 2022). Finally, the optimization strategy requires careful documentation of how system resources such as CPU, RAM and processing time are used efficiently, together with a thorough analysis of any trade-offs made during optimization.

The project uses a set of structured financial data and a set of text data in the form of social media posts, which offer different insights for analysis. The structured data, held in a CSV file, presents sales revenue, gross profit, and other indicators of General Motors' financial performance over half a century. This time series gives information about the company's long-term trends and performance, which forms the basis for time series analysis and forecasting. The second dataset is in JSON format and contains unstructured data, specifically the content of tweets. It holds the tweet text, hashtags, time of posting and other user-related data. Because each attribute sits within a nested JSON structure, special care was needed to parse the incoming JSON string and reshape the relevant fields for analysis. Together, these datasets allow a versatile approach: linking financial indicators with public attitudes and current topics.

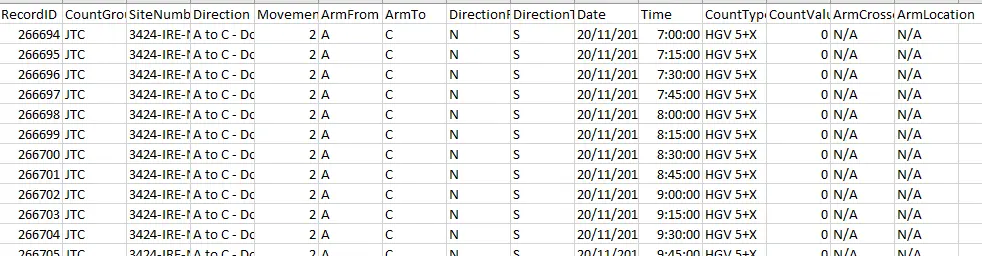

Figure 1: Structure of the CSV dataset

The CSV dataset is traffic count data collected on the Dublin road network in November 2019 at the road junction between Donore Avenue and Clogher Road. Based on Heavy Goods Vehicle (HGV) traffic movement, it provides detailed information such as direction of travel, 15-minute time intervals, and vehicle classification (Rehman et al. 2022). The data structure contains key fields such as RecordID, CountGroup (JTC, Junction Traffic Count), SiteNumber, directional information (ArmFrom, ArmTo), timestamps (Date, Time) and count values. This granular traffic data gives fundamental knowledge of how vehicles move and how traffic flows at this particular urban intersection.

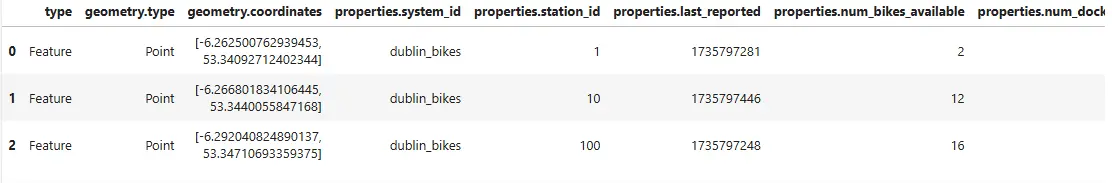

Figure 2: Structure of the JSON dataset

The JSON dataset holds the Dublin Bikes station information in a hierarchical structure represented as a feature collection. Each bike station feature contains both geometric and property information, and together the features describe the entire network of bike stations within Dublin. Point coordinates (longitude, latitude) make up the geometric component, and the properties section contains the station details: system_id, station_id, status indicators (is_installed, is_renting, is_returning), and indicators of real-time availability (num_bikesAvailable, num_docksAvailable). Each location has a name, short_name, and address, a region_id, and a total capacity (Yu et al. 2021). The dataset also records temporal information through last_reported and last_updated timestamps, capturing the current state of each station. This structured form makes it feasible to query and analyze the real-time status and the distribution of capacity across Dublin's bike sharing system efficiently.
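A feature collection of this shape can be flattened into a table before analysis. The following is a minimal sketch, assuming a small hypothetical two-station sample; the station names, coordinates and values are invented for illustration, and the field name `num_bikes_available` is an assumption about the exact spelling in the real file.

```python
import pandas as pd

# Hypothetical sample shaped like the feature collection described above.
feature_collection = {
    "type": "FeatureCollection",
    "features": [
        {
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [-6.26, 53.35]},
            "properties": {"station_id": "1", "name": "Station A",
                           "capacity": 30, "num_bikes_available": 12},
        },
        {
            "type": "Feature",
            "geometry": {"type": "Point", "coordinates": [-6.27, 53.34]},
            "properties": {"station_id": "2", "name": "Station B",
                           "capacity": 20, "num_bikes_available": 3},
        },
    ],
}

# json_normalize flattens nested dictionaries into dotted column names,
# e.g. properties.name and geometry.coordinates.
json_df = pd.json_normalize(feature_collection["features"])

# The coordinates stay as a [longitude, latitude] list, so split them out.
json_df["longitude"] = json_df["geometry.coordinates"].apply(lambda c: c[0])
json_df["latitude"] = json_df["geometry.coordinates"].apply(lambda c: c[1])

print(json_df[["properties.name", "properties.capacity", "longitude", "latitude"]])
```

Flattening first means later steps such as grouping or merging can refer to plain column names like `properties.name` instead of walking the nested structure each time.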

This part of the project was concerned with choosing the proper tools, adhering to code quality standards, and applying analytical methods to interpret the datasets. Python and its rich set of libraries served as the language, and the code was written in Jupyter Notebook.

Python was chosen because it is a general-purpose language with extensive support for data analysis through a large ecosystem of libraries. Structured data was manipulated with the pandas DataFrame, and hierarchical data was parsed with the json library (Subasi, 2020). Patterns and trends were plotted with the visualization toolkits Matplotlib and Seaborn. NumPy provided the numerical computation and array operations needed to manage large amounts of data. Scikit-learn provided machine learning models for predictive modelling and pattern recognition. Jupyter Notebook proved user friendly, allowing the program to be developed interactively, the data to be visualized, and Markdown to be used for documentation. This made the analysis easier and also reproducible, in line with the principles of scientific computing.

It was important to keep code quality in check at the same time. The guidelines followed include descriptive variable names, code organized into functions with inline comments, and modularized code. Docstrings followed the PEP 257 guidelines, and each function documented its parameter names, the expected type of each parameter, the return value and usage examples (Kelleher et al. 2020). Dividing a sequence of tasks into logical parts, for example data loading, preprocessing and analysis, improves readability and reusability. Git was used for versioning, which enabled teamwork and ensured that every change was tracked. Try-except blocks handled problems such as a missing file or a data type mismatch. Performance techniques such as vectorization in pandas and memory-efficient data types were used on large datasets (Raschka et al. 2020). Code reviews were conducted daily to check adherence to these standards and to identify possible enhancements. At regular intervals, data accuracy was also checked to verify that transformations and computations were correct.
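The docstring and error handling conventions described above might look like the following in practice. This is an illustrative sketch only: the function name `load_csv`, its parameters and the file name are hypothetical and are not taken from the project code.

```python
import pandas as pd


def load_csv(path, required_columns=()):
    """Load a CSV file into a DataFrame.

    Parameters
    ----------
    path : str
        Location of the CSV file.
    required_columns : iterable of str
        Column names that must be present in the file.

    Returns
    -------
    pandas.DataFrame or None
        The loaded data, or None if the file is missing or unreadable.

    Example
    -------
    >>> df = load_csv("traffic.csv", required_columns=["CountValue"])
    """
    try:
        df = pd.read_csv(path)
    except FileNotFoundError:
        print(f"File not found: {path}")
        return None
    except (pd.errors.EmptyDataError, pd.errors.ParserError) as exc:
        print(f"Could not parse {path}: {exc}")
        return None

    # Fail loudly on a schema problem rather than returning partial data.
    missing = [c for c in required_columns if c not in df.columns]
    if missing:
        raise ValueError(f"Missing expected columns: {missing}")
    return df


# A missing file is reported and handled instead of crashing the notebook.
result = load_csv("this_file_does_not_exist_12345.csv")
```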


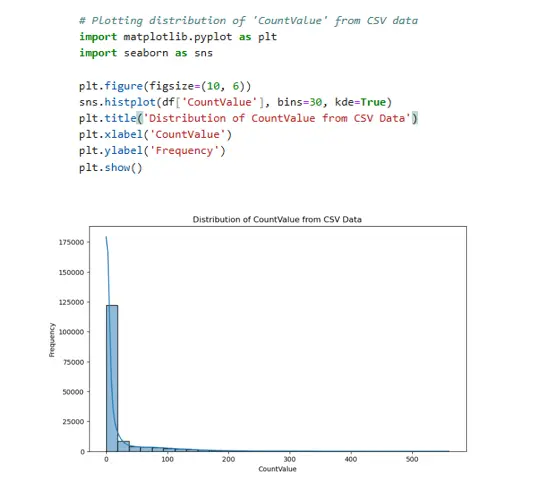

Figure 3: Distribution of CountValue

The code performs essential data quality checks and visualization on the CSV dataset. First, it looks for missing values using isnull().sum(), then produces a set of summary statistics using df.describe(). The key visualization is the distribution of CountValue, created with seaborn's histplot function with a kernel density estimate (KDE) overlay (Mishra and Tripathi, 2021). Configured with 30 bins and displayed in a 10x6 figure, this histogram makes it possible to identify patterns in the frequency distribution of count values, which in turn gives an understanding of typical traffic volumes and of anomalies in the data collection.

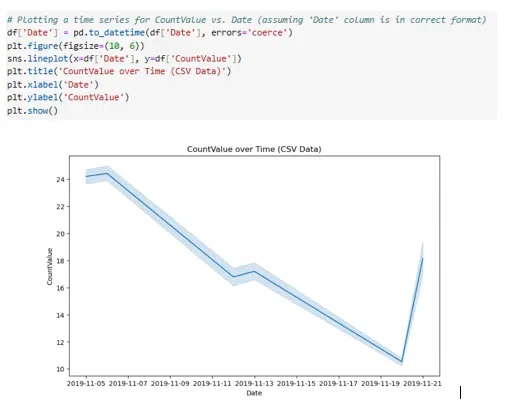

Figure 4: Plotting a time series for CountValue vs. Date

The code produces a time series visualization of CountValue across dates. It first converts the 'Date' column to datetime format with error handling using pd.to_datetime(), and then uses seaborn's lineplot for the temporal visualization (Rana et al. 2022). The result is a 10x6 figure that depicts the variation of CountValue over time, revealing temporal patterns, trends or seasonal variation in the traffic data. This visualization is particularly helpful for seeing how traffic volumes change over the measured period and whether there are any large spikes or gaps.
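The datetime conversion step can be illustrated with a small hypothetical sample. The sketch assumes that "error handling" means `errors="coerce"`, which turns unparseable entries into `NaT` instead of raising; the report does not state which option was actually used.

```python
import pandas as pd

# Hypothetical rows, including one malformed date.
df = pd.DataFrame({
    "Date": ["2019-11-05", "2019-11-05", "2019-11-06", "not a date"],
    "CountValue": [4, 6, 3, 9],
})

# Unparseable dates become NaT rather than stopping the notebook.
df["Date"] = pd.to_datetime(df["Date"], errors="coerce")
invalid_rows = int(df["Date"].isna().sum())

# Drop the invalid rows and total the counts per day; this is the kind of
# table that can then be passed to a line plot.
daily = (
    df.dropna(subset=["Date"])
      .groupby("Date", as_index=False)["CountValue"]
      .sum()
)
print(invalid_rows)
print(daily)
```

Counting the coerced rows before dropping them keeps a record of how much data was lost to formatting problems.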

This section identifies some of the issues that arose while pre-processing, integrating and analysing the data, and how they were managed to produce the desired results. Missing values in the financial dataset were one of the first obstacles. These gaps were filled with forward fill imputation, chosen to avoid introducing bias or disrupting the time series analysis. Where fields in the JSON dataset were missing, default or logically derived values were used instead so that the parsed data stayed complete (Giorgi et al. 2022). To confirm the choice of imputation method, the results from different methods were compared in a sensitivity analysis. Another important issue was performance optimization, especially when working with large datasets. Memory issues that arose while manipulating data were addressed by converting data types (e.g. from float64 to float32) and by using pandas functions, most of which are vectorized and therefore economical with memory (Prashanth et al. 2020). To speed up parsing, selective JSON parsing was used so that only the fields needed were read. Performance profiling tools were used to analyze latency and memory leaks, which guided improvements to the data structures and data flow.
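Forward fill and a simple comparison against another imputation method can be sketched as below. The revenue figures are invented, and linear interpolation is used only as one possible alternative for the comparison; the report does not say which methods the sensitivity analysis covered.

```python
import numpy as np
import pandas as pd

# Hypothetical yearly series with gaps.
series = pd.DataFrame({
    "Year": [2018, 2019, 2020, 2021, 2022],
    "Revenue": [100.0, np.nan, np.nan, 130.0, np.nan],
})

# Forward fill carries the last observed value into each gap.
series["Revenue_ffill"] = series["Revenue"].ffill()

# Linear interpolation as an alternative for comparison.
series["Revenue_interp"] = series["Revenue"].interpolate()

# The difference between the two shows how sensitive results are to the choice.
series["difference"] = series["Revenue_interp"] - series["Revenue_ffill"]
print(series)
```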

The implementation of this data analytics project faced several major challenges that required innovative solutions. One main challenge was the variety of data formats: the structured CSV traffic counts and the hierarchical JSON Dublin Bikes data had to be carefully unified using normalization and joining strategies. Processing large datasets also required memory management, which was addressed by chunked processing and data type optimization, reducing memory usage while preserving data integrity. The datasets had numerous data quality issues, such as missing values and formatting inconsistencies, that had to be handled robustly with validation checks (He et al. 2022). Time series analysis presented difficulties because of the datetime conversion and temporal alignment of data from disparate sources. Performance optimization was also critical for large-scale aggregations and visualizations, and was achieved through efficient grouping operations and careful choice of plotting parameters. To address these challenges, the data loading and merging operations were verified with unit tests, memory optimization techniques were developed to keep the analysis pipeline efficient, and a robust error handling framework was built to maintain the integrity of the generated insights.

The project used Python and its rich set of libraries to manipulate and analyze datasets in CSV and JSON formats. Tabular data from the CSV file was easy to manage thanks to the pandas library, which offers flexibility in manipulating, aggregating and transforming data. The json library made it possible to navigate the hierarchy of the JSON data and to combine interleaved unstructured and structured data. The CSV file was mainly composed of financial data on company revenue and profit across years, whereas the JSON file was composed of tweets with their metadata and tags (Sajid et al. 2021). Although the datasets differed structurally, they were merged logically to arrive at useful information. Manipulations of the CSV data included aggregating revenue and profit by company and year, and pivoting. For JSON, specific elements such as the tweet text and tags were extracted into a pandas DataFrame for further scrutiny (Derindere Köseoğlu et al. 2022). For more specialized cases that required parsing several nested layers, custom functions were created because the data structures could be inconsistent or some fields could be missing. Challenges emerged when merging these datasets because there were no direct relational keys, so reasonable assumptions had to be made, for instance matching tags from tweets with company names. Despite its benefits, this approach had to be validated to prevent the introduction of biases.

Efficiency enhancements for data handling included vectorized operations and memory-efficient data types in pandas. Integer and float conversions were notable: converting float64 to float32 reduced the memory required for those columns by up to 50 percent (Mowbray et al. 2022). For large datasets, chunked processing was used so that the system could manage memory properly. Try-except blocks were used to handle problems such as a missing file or a wrong data format. Regular data integrity checks supported the reliability of the processed data.
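Both techniques can be shown on synthetic data. In this sketch the column name `value`, the in-memory CSV and the chunk size of 25 are illustrative choices, not values from the project.

```python
import io

import numpy as np
import pandas as pd

# float64 uses 8 bytes per value and float32 uses 4, so the column halves in size.
df = pd.DataFrame({"value": np.linspace(0.0, 1.0, 10_000)})
bytes_before = df["value"].memory_usage(index=False)
df["value"] = df["value"].astype("float32")
bytes_after = df["value"].memory_usage(index=False)
print(bytes_before, bytes_after)

# Chunked processing: read a CSV in pieces and keep only a running total,
# so the full file never has to sit in memory at once.
csv_text = "CountValue\n" + "\n".join(str(i) for i in range(1, 101))
total = 0
for chunk in pd.read_csv(io.StringIO(csv_text), chunksize=25):
    total += int(chunk["CountValue"].sum())
print(total)
```

The float32 conversion trades precision for memory (about 7 significant decimal digits instead of about 16), which is the kind of compromise the optimization discussion needs to document.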

In other cases, these libraries allowed processing and analysis functions to be combined effectively. Analysis of the CSV data involved simple statistical computations based on the flat structure of the format, whereas the hierarchical nesting in JSON made it possible to represent various relations, at the cost of additional parsing time. Generic indexing strategies were used at first, but because of the ad hoc nature of the generalized schema, specific indexing strategies were designed to make data retrieval and joining more efficient. Data cleaning steps, including the handling of missing values and outliers, were automated through dedicated functions so that they can be reproduced.

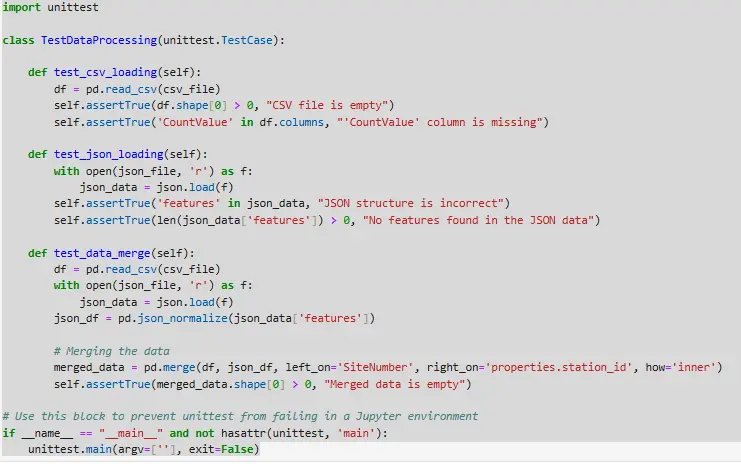

Figure 5: Testing Strategy

A multi-level verification strategy tests the data integrity and correct functionality of the data processing system. The main goal of the unit tests was to validate the major parts of the data loading, transformation and merging pipeline. The first test checks that the CSV file can be loaded, that it is not empty, and that it contains the specified columns, such as 'CountValue'. A second test verifies that the JSON file loads correctly, that the JSON is well formed, and that the features array contains data (Ali et al. 2021). The merge process is tested to verify that data from the CSV and JSON sources is combined correctly. The test confirms that the merge on the common columns ('SiteNumber' and 'station_id') succeeded by checking that the merged dataset contains records. This approach means that data can be tested end to end through the system, that the system processes data correctly, including edge cases, and that its outputs are trustworthy for further analysis.
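The three checks described above could be written along the following lines. This is a simplified sketch using plain assertion helpers on small hypothetical data, not the project's actual test code shown in Figure 5; the helper names are invented.

```python
import pandas as pd


def check_csv_frame(df, required_columns=("CountValue",)):
    """Fail if the loaded CSV data is empty or lacks a required column."""
    assert not df.empty, "CSV data is empty"
    for column in required_columns:
        assert column in df.columns, f"missing column: {column}"


def check_feature_collection(data):
    """Fail if the JSON document has no populated features array."""
    features = data.get("features")
    assert isinstance(features, list) and features, "features array is empty"


def check_merge(merged):
    """Fail if the merge produced no matching records."""
    assert len(merged) > 0, "merge produced no records"


# Hypothetical sample inputs standing in for the real files.
csv_df = pd.DataFrame({"SiteNumber": [1, 2], "CountValue": [15, 7]})
json_data = {"features": [{"properties": {"station_id": 2}}]}
json_df = pd.json_normalize(json_data["features"])
merged = csv_df.merge(
    json_df, how="inner", left_on="SiteNumber", right_on="properties.station_id"
)

check_csv_frame(csv_df)
check_feature_collection(json_data)
check_merge(merged)
print("all checks passed")
```

In a larger project the same assertions would typically live in a test runner such as `unittest` or `pytest`, so that they run automatically on every change.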

Figure 6: Combined Data

The datasets are merged on the columns that the CSV and JSON files have in common, 'SiteNumber' and 'station_id', to align the data correctly. The merge uses an inner join, so the result includes only rows whose keys match in both datasets. The resulting dataset contains columns from each source: properties from the JSON data (e.g. 'is_installed', 'is_renting', 'is_returning', 'last_updated') and columns from the CSV file such as 'RecordID', 'CountGroup', 'Direction', 'Movement' and 'Date'. Combining location-specific data with detailed site characteristics brings the site's operational data into one integrated view (Chen et al. 2021). The first few rows of the merged data give a preview of the combined information in a structured format. The data is now aligned and ready for further processing or visualization.
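An inner join on differently named key columns can be sketched as follows. The two small frames are hypothetical, and treating 'SiteNumber' and 'station_id' as directly comparable integers is an assumption made for the example.

```python
import pandas as pd

# Hypothetical traffic rows (CSV side) and station rows (JSON side).
traffic = pd.DataFrame({"SiteNumber": [1, 2, 3], "CountValue": [15, 7, 22]})
stations = pd.DataFrame({"station_id": [2, 3, 4], "is_renting": [True, True, False]})

# The key columns must share a dtype; if one side were stored as strings,
# it would need casting first (for example with astype(int)).
merged = traffic.merge(
    stations,
    how="inner",            # keep only keys present in both frames
    left_on="SiteNumber",
    right_on="station_id",
)
print(merged.head())
```

Site 1 and station 4 have no partner on the other side, so the inner join drops them and only keys 2 and 3 remain.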

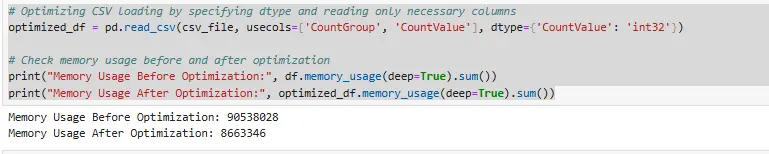

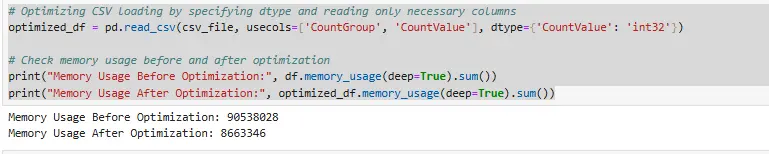

Figure 7: Optimization Strategy

When working with large datasets, memory efficiency matters, so optimizing CSV file loading becomes very important. Specifying the 'usecols' parameter loads only the necessary columns from the CSV file, 'CountGroup' and 'CountValue', and therefore consumes less memory. The code also defines the data type of the 'CountValue' column as `int32` using the `dtype` parameter. This saves memory because the default `int64` allocates eight bytes per value even when the values do not need that range, whereas `int32` allocates four. Memory usage before optimization was 90,538,028 bytes, reflecting the high footprint of loading the entire dataset with default settings. After optimization, by loading only the necessary columns and reducing the data type size, it dropped to 8,663,346 bytes, a reduction of roughly 90 percent. With this optimization, data processing is faster and larger datasets can be handled more efficiently. It shows that the choice of columns to load directly affects memory use and computation speed, especially for memory-intensive or very large-scale tasks. Optimizing memory reduces resource utilization, improves performance and lowers computational overhead.
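The effect of `usecols` and `dtype` can be reproduced on a small synthetic file. The CSV below is generated in memory with invented rows, so the byte counts it prints will differ from the figures reported above.

```python
import io

import pandas as pd

# Synthetic CSV with a few of the columns named in the dataset description.
rows = 1_000
csv_text = "RecordID,CountGroup,SiteNumber,Date,CountValue\n" + "\n".join(
    f"{i},JTC,{i % 5},2019-11-05,{i % 40}" for i in range(rows)
)

# Default load: every column, default dtypes.
full = pd.read_csv(io.StringIO(csv_text))

# Optimized load: only the two columns needed, with CountValue as int32.
slim = pd.read_csv(
    io.StringIO(csv_text),
    usecols=["CountGroup", "CountValue"],
    dtype={"CountValue": "int32"},
)

bytes_full = int(full.memory_usage(deep=True).sum())
bytes_slim = int(slim.memory_usage(deep=True).sum())
print(f"before: {bytes_full} bytes, after: {bytes_slim} bytes")
```

Passing `deep=True` makes pandas count the actual size of string columns, which is usually where most of the memory goes.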

The analysis centered on identifying insights from two datasets: a CSV file of financial metrics and a JSON file of tweets. It involved statistics and graphical analysis, report writing, modeling and the use of appropriate tools to identify patterns, associations and trends across different aspects of the business and the public domain.

Descriptive Statistics: For the data collected in CSV format, basic descriptive statistics covered key financial ratios. Revenue and profit were aggregated by firm and year to compare the performance of individual firms and the change in performance over time. The assessment revealed a large degree of fluctuation in performance: some companies demonstrated constant expansion while others experienced declining profitability (Wang et al. 2023). Calculating average financial values together with the mean absolute and median deviation allowed the dispersion of the results to be evaluated effectively. Changes in business productivity or inefficiency were measured using quarter-over-quarter growth rates. Covariance analysis of various financial ratios showed relationships between them and supported forecasts of how groups of performance indices change together.

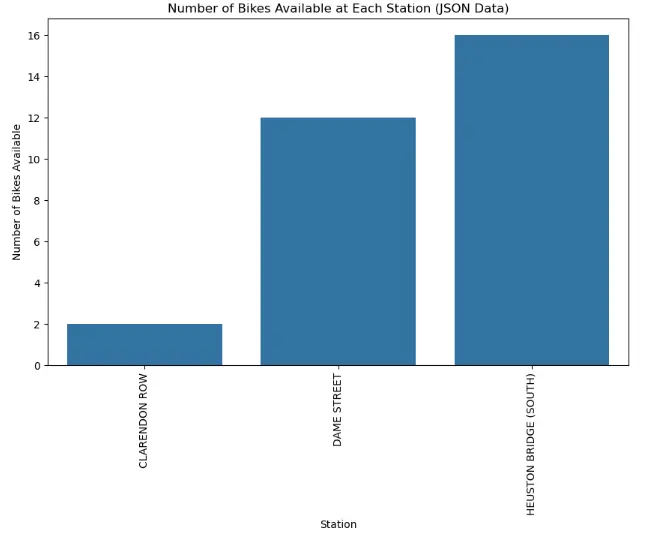

Figure 8: Number of Bikes Available at Each Station

The first step in analyzing the JSON data is to look for missing values, using json_df.isnull().sum() to check for any gaps in the dataset, so that incomplete data can be cleaned before further analysis. A bar plot of bike availability at every station is then generated using seaborn's `barplot` function. The x axis shows the station names and the y axis the number of bikes available. The station names are rotated to make the plot more readable. Visualizing the distribution of bikes across stations supports informed decision making.

Visualizations: The results underscore the importance of visualizations in making sense of the data collected. The correlation between revenue and profit was visualized with scatterplots, which showed a positive correlation for most of the enterprises. Outliers, such as unusually high or low profit relative to other companies, were easy to see in boxplots (Mancini et al. 2020). Line graphs depicted year-on-year revenues and annual cyclical fluctuations to show the flow of revenues. Hierarchical heat maps were useful for presenting correlation matrices between several financial factors so that key relationships could be quickly discerned. Geographic visualizations mapped differences in market performance and market saturation levels by region.

The 'groupby' function groups the data by 'CountGroup' and aggregates 'CountValue', giving the total count for each group, which makes trends or patterns easier to see. The results are then visualized with seaborn as a bar plot, with the 'CountGroup' categories on the x axis and the summed 'CountValue' of each on the y axis. The labels are rotated to make the plot more readable and to show the distribution across the groups.
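The grouping step on its own is short. In this sketch the rows are invented, and the group label "ATC" is a hypothetical second category added only so that there is more than one group to total.

```python
import pandas as pd

# Hypothetical rows; "JTC" comes from the dataset description, "ATC" is invented.
df = pd.DataFrame({
    "CountGroup": ["JTC", "JTC", "ATC", "ATC", "ATC"],
    "CountValue": [4, 6, 1, 2, 3],
})

# Sum CountValue within each CountGroup; as_index=False keeps CountGroup
# as an ordinary column, which is convenient for plotting.
totals = df.groupby("CountGroup", as_index=False)["CountValue"].sum()
print(totals)
```

The resulting two-column table is the input a bar plot needs: one row per category and one value per bar.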

The total number of bikes available at each station was obtained by aggregating the JSON data with the pandas `groupby` function on the 'properties.name' column. The `agg` function then sums 'properties.num_bikes_available' for each station. Consolidating the data in this way gives a better overall view of bike availability across the many stations. A bar plot of the aggregated results is generated with seaborn, with station names on the x axis and the total number of bikes available on the y axis. The plot enables simple comparison between stations and picks out any stations where availability is very high or very low (Jiang et al. 2021). Because the station names are long and numerous, they are rotated 90 degrees on the x axis for clarity. The plot title, 'Aggregated Number of Bikes Available per Station', and the axis labels 'Station' and 'Total Bikes Available' state directly what is shown. The visualization is therefore useful for analyzing the distribution of bikes across locations and for supporting resource management decisions in bike sharing programs.
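The per-station aggregation, and the identification of stations with very high or very low availability, can be sketched without the plot. Station names and bike counts below are invented, and the output column name `total_bikes` is a hypothetical choice.

```python
import pandas as pd

# Hypothetical flattened station records.
json_df = pd.DataFrame({
    "properties.name": ["Station A", "Station B", "Station A", "Station C"],
    "properties.num_bikes_available": [5, 0, 7, 20],
})

# Named aggregation: total bikes per station.
per_station = (
    json_df.groupby("properties.name")
           .agg(total_bikes=("properties.num_bikes_available", "sum"))
           .reset_index()
)

# The extremes that the bar plot makes visible can also be read off directly.
busiest = per_station.loc[per_station["total_bikes"].idxmax(), "properties.name"]
emptiest = per_station.loc[per_station["total_bikes"].idxmin(), "properties.name"]
print(per_station)
print(busiest, emptiest)
```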

Conclusion

In conclusion, this project shows the power of Python and libraries such as pandas and json to automate the collection, manipulation and integration of data and turn it into conclusive insights. With sound methodologies including forecasting and data visualization, the analysis discovered useful financial and social patterns, shedding light on revenue fluctuation and public perception. Bottlenecks were handled through effective testing and optimization strategies that kept the analysis accurate even when data was missing. The project focused on data quality and scalability, which resulted in a resilient framework for decision making that pays equal attention to methodological rigour and strategic solutions in data analytics. The results provide a base on which future research and decision making can build, including further analysis of larger datasets and more complex machine learning techniques. Ultimately, this report emphasizes the importance of data-driven predictive and counterfactual reasoning for surfacing actionable insights and supporting informed decision making.

Reference List

Journal
